In recent years, neural networks have transformed artificial intelligence (AI), machine learning (ML), and data science. They form the backbone of many modern technologies, from voice assistants to self-driving cars. This article covers the basics of neural networks: how they work, where the field is heading, and how to learn more about them.

Fundamentals of Neural Networks

Neural networks are computational models loosely inspired by the human brain. They consist of interconnected nodes, or neurons, organized into layers: an input layer, one or more hidden layers, and an output layer. Each connection has an associated weight, and each neuron has a bias. Training adjusts these parameters to minimize the error between the network’s predictions and the correct answers.
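To make this structure concrete, here is a minimal, dependency-free sketch of a single neuron and a fully connected layer. It assumes a sigmoid activation, and the helper names `neuron` and `layer`, the 2-3-1 layer sizes, and every weight and bias value are hypothetical, hand-picked for illustration rather than learned.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation, written to avoid overflow in math.exp for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One neuron: weighted sum of its inputs plus its bias, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def layer(inputs: list[float], weight_rows: list[list[float]], biases: list[float]) -> list[float]:
    """A fully connected layer: one neuron per (weight row, bias) pair, each seeing every input."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical 2-input -> 3-hidden -> 1-output network with hand-picked parameters.
x = [0.5, -1.0]  # input layer: the raw data
hidden = layer(x, [[0.1, 0.4], [-0.3, 0.2], [0.8, -0.5]], [0.0, 0.1, -0.2])
output = layer(hidden, [[0.6, -0.1, 0.3]], [0.05])
print(hidden, output)
```

Each hidden neuron has one weight per input plus its own bias, and the output layer treats the hidden activations as its inputs; training (described below) is what would replace these hand-picked numbers with useful ones.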

Key Concepts

- Neurons: The basic units of a neural network. Each neuron receives inputs, computes a weighted sum of them plus a bias, applies an activation function, and passes the result to the next layer.

- Layers: Neural networks are structured in layers. The input layer receives raw data, hidden layers process this data, and the output layer provides the final prediction.

- Weights and Biases: Weights determine the strength (and sign) of each connection between neurons, while a bias is a per-neuron offset added to the weighted sum, which lets a neuron shift its output independently of its inputs and helps the model fit the data.

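- Activation Functions: Functions that introduce non-linearity into the model, enabling it to learn complex patterns. Without them, any stack of layers would reduce to a single linear transformation, no matter how deep. Common activation functions include sigmoid, tanh, and ReLU (Rectified Linear Unit).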
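The three activation functions named above are short enough to write out directly. This is a minimal sketch using only Python’s `math` module; the function names are hypothetical, and the last few lines illustrate why non-linearity matters by showing two linear "layers" collapsing into one.

```python
import math


def sigmoid(z: float) -> float:
    """Maps any real z into (0, 1); the branching form avoids overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z: float) -> float:
    """Maps any real z into (-1, 1); zero-centered, unlike sigmoid."""
    return math.tanh(z)


def relu(z: float) -> float:
    """Rectified Linear Unit: keeps positive values, replaces negatives with 0."""
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):+.3f}  relu={relu(z):.1f}")


# Why non-linearity matters: two stacked linear "layers" without an activation
# collapse into a single linear function, here 3 * (2x + 1) - 2 == 6x + 1.
def linear_a(x: float) -> float:
    return 2 * x + 1


def linear_b(x: float) -> float:
    return 3 * x - 2


assert all(linear_b(linear_a(x)) == 6 * x + 1 for x in range(-5, 6))
```

ReLU is cheap to compute and does not saturate for positive inputs, which is one reason it is a common default for hidden layers; sigmoid is often kept for outputs that should be read as probabilities.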

How Neural Networks Work

Neural networks learn by adjusting weights and biases based on the error of the output. This process is called training and typically involves the following steps:

- Forward Propagation: Data is passed through the network, layer by layer. Each neuron computes a weighted sum of its inputs plus its bias and applies an activation function; the output layer’s result is the network’s prediction.

- Loss Calculation: A loss function measures how far the network’s output is from the actual target value. Common loss functions include Mean Squared Error (MSE), typically used for regression, and Cross-Entropy Loss, typically used for classification.

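- Backward Propagation: The gradient of the loss with respect to every weight and bias is computed by applying the chain rule backward from the output layer (this is backpropagation). An optimization algorithm such as Gradient Descent then updates each parameter by a small step in the direction opposite its gradient, which reduces the loss.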

- Iteration: The forward and backward propagation steps are repeated over the training data, usually for many full passes (epochs), until the model converges, meaning the loss stops decreasing meaningfully or reaches an acceptable level.
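The two loss functions mentioned in the loss-calculation step can be computed as follows. MSE averages the squared differences between predictions and targets. For cross-entropy, this minimal sketch shows only the binary (two-class) form, one common variant; the function names and the small `eps` clamp that keeps `math.log` away from zero are illustrative choices, not a specific library’s API.

```python
import math


def mse(predictions: list[float], targets: list[float]) -> float:
    """Mean Squared Error: average of squared differences (common for regression)."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def binary_cross_entropy(probs: list[float], labels: list[int], eps: float = 1e-12) -> float:
    """Binary cross-entropy for labels in {0, 1} (common for classification).

    Predicted probabilities are clamped to [eps, 1 - eps] so math.log never receives 0.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        q = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(q) + (1 - y) * math.log(1.0 - q))
    return total / len(labels)


print(mse([2.5, 0.0, 2.0], [3.0, -0.5, 2.0]))    # (0.25 + 0.25 + 0) / 3
print(binary_cross_entropy([0.9, 0.2], [1, 0]))  # confident and correct: small loss
print(binary_cross_entropy([0.1, 0.8], [1, 0]))  # confident and wrong: large loss
```

Cross-entropy penalizes confident wrong answers much more heavily than MSE does, which is one reason it is usually preferred for classification.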
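The four training steps above can be sketched end to end in plain Python. This is a minimal illustration under several assumptions: a tiny 2-input, 3-hidden, 1-output network with tanh hidden units and a sigmoid output, MSE loss, full-batch gradient descent, and a hypothetical toy task (learning logical OR). The helper names (`init_params`, `forward`, `loss_and_grads`, `train`), the learning rate of 0.5, and the 2000 epochs are illustrative choices, not a reference implementation; real projects would typically use a framework such as PyTorch or TensorFlow, which compute gradients automatically.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def init_params(n_in: int, n_hidden: int, seed: int = 0) -> dict:
    """Small random weights and zero biases, a simple common starting point."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p: dict, x: list[float]) -> tuple[list[float], float]:
    """Forward propagation: returns the hidden activations and the output probability."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"])
    return h, y_hat


def loss_and_grads(p: dict, data: list[tuple[list[float], float]]) -> tuple[float, dict]:
    """Loss calculation (MSE over the dataset) plus its gradients via backpropagation."""
    n = len(data)
    g = {
        "W1": [[0.0] * len(row) for row in p["W1"]],
        "b1": [0.0] * len(p["b1"]),
        "w2": [0.0] * len(p["w2"]),
        "b2": 0.0,
    }
    loss = 0.0
    for x, y in data:
        h, y_hat = forward(p, x)
        loss += (y_hat - y) ** 2 / n
        # Output layer: dL/dy_hat times the sigmoid derivative y_hat * (1 - y_hat).
        delta_out = 2.0 * (y_hat - y) / n * y_hat * (1.0 - y_hat)
        g["b2"] += delta_out
        for j, hj in enumerate(h):
            g["w2"][j] += delta_out * hj
            # Chain rule back through w2[j] and tanh (derivative 1 - tanh^2).
            delta_h = delta_out * p["w2"][j] * (1.0 - hj * hj)
            g["b1"][j] += delta_h
            for i, xi in enumerate(x):
                g["W1"][j][i] += delta_h * xi
    return loss, g


def train(p: dict, data: list[tuple[list[float], float]], lr: float = 0.5, epochs: int = 2000) -> list[float]:
    """Iteration: one gradient descent step per epoch, moving each parameter against its gradient."""
    history = []
    for _ in range(epochs):
        loss, g = loss_and_grads(p, data)
        history.append(loss)
        p["b2"] -= lr * g["b2"]
        for j in range(len(p["w2"])):
            p["w2"][j] -= lr * g["w2"][j]
            p["b1"][j] -= lr * g["b1"][j]
            for i in range(len(p["W1"][j])):
                p["W1"][j][i] -= lr * g["W1"][j][i]
    return history


# Hypothetical toy task: learn logical OR of two binary inputs.
OR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
params = init_params(n_in=2, n_hidden=3)
history = train(params, OR_DATA)
print(f"loss: first epoch {history[0]:.4f}, last epoch {history[-1]:.4f}")
for x, y in OR_DATA:
    print(x, y, round(forward(params, x)[1], 3))
```

MSE is used here only to keep the derivative short; with a sigmoid output, cross-entropy is the more usual pairing in practice. Comparing each gradient from `loss_and_grads` against a finite-difference estimate is a simple way to check a hand-written backward pass like this one.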

Future Aspects of Neural Networks

Neural network research is advancing quickly, with many promising applications on the horizon. Here are some key areas to watch:

- Advanced Architectures: Specialized architectures such as Convolutional Neural Networks (CNNs) for image processing, Recurrent Neural Networks (RNNs) for sequential data, and Generative Adversarial Networks (GANs) for generating new data are already widely used, and research continues to produce new ones.

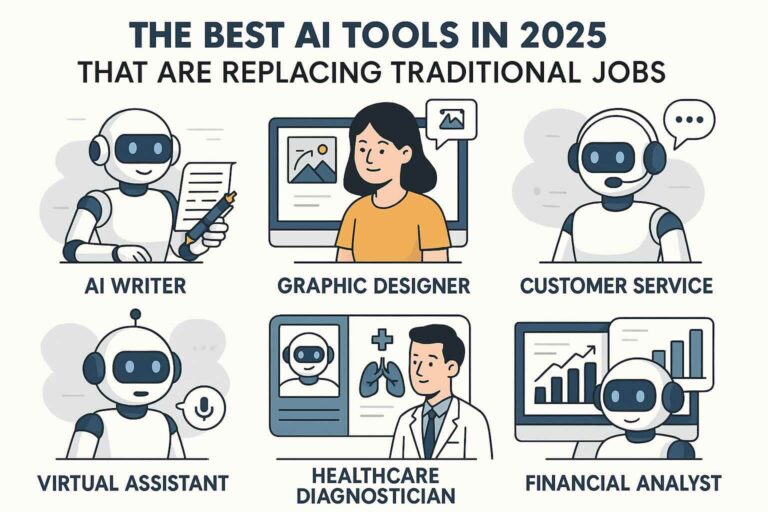

- AI and Automation: Neural networks are likely to drive more sophisticated AI systems capable of performing complex tasks, increasing automation across many industries.

- Healthcare: Improved neural network models could revolutionize healthcare, enabling more accurate diagnosis, personalized treatment plans, and advanced medical research.

- Natural Language Processing (NLP): Enhanced models will improve the accuracy and capability of NLP applications, leading to better language translation, sentiment analysis, and human-computer interaction.

- Ethics and Fairness: As neural networks become more prevalent, ensuring ethical use and fairness in AI will be crucial. This includes addressing biases in data and ensuring transparency in AI decisions.

How to Learn More About Neural Networks

If you’re interested in diving deeper into neural networks, here are some steps to guide your learning journey:

1. Online Courses and Tutorials:

- Coursera: Offers comprehensive courses like Andrew Ng’s “Machine Learning” and “Deep Learning Specialization.”

- edX: Provides courses from top universities on neural networks and deep learning.

- Udacity: Features Nanodegree programs in AI and deep learning.

2. Books:

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville: A thorough introduction to the field of deep learning.

- “Neural Networks and Deep Learning” by Michael Nielsen: A free online book that explains the core concepts clearly.

3. Practical Experience:

- Kaggle: Participate in machine learning competitions and projects to gain hands-on experience.

- TensorFlow and PyTorch: Learn to use these popular frameworks by following official tutorials and building your own projects.

4. Research Papers and Journals:

- Stay updated with the latest advancements by reading papers from conferences like NeurIPS, ICML, and CVPR.

- Follow journals such as the Journal of Machine Learning Research (JMLR) and IEEE Transactions on Neural Networks and Learning Systems.

5. Communities and Forums:

- Engage with online communities like Reddit’s r/MachineLearning, Stack Overflow, and GitHub repositories to collaborate and seek advice from experts.

Conclusion

Neural networks are a fascinating and rapidly evolving field with the potential to transform numerous aspects of our lives. By understanding their fundamentals, recognizing their future potential, and actively pursuing further learning, you can be at the forefront of this technological revolution. Whether you’re a beginner or an experienced practitioner, there’s always more to explore and discover in the world of neural networks.