Large Language Models (LLMs) have transformed the landscape of Natural Language Processing (NLP) and artificial intelligence. These models, capable of understanding and generating human-like text, are used in a wide range of applications, from chatbots and virtual assistants to content creation and translation services. This article aims to provide an easy-to-understand overview of LLMs, how they work, and their applications.

What Are Large Language Models (LLMs)?

Large Language Models are AI systems that use deep learning to process and generate natural language. They are “large” in two senses: they contain a very large number of trainable parameters (often billions), and they are trained on vast datasets containing billions of words, which lets them learn a wide range of linguistic patterns and information.

Key Features of LLMs

1. Scale: Trained on extensive datasets, LLMs capture a broad spectrum of language patterns and knowledge.

2. Contextual Understanding: They use attention mechanisms to relate each word to the text around it, enabling more accurate and relevant responses.

3. Versatility: LLMs can be fine-tuned for various specific tasks, making them highly adaptable.

How Do LLMs Work?

LLMs rely on an architecture called the transformer, introduced by Vaswani et al. in 2017. This architecture uses a mechanism known as attention, which lets the model weigh how relevant every other word in the input is when it processes a given word. Here’s a step-by-step breakdown of how LLMs work:
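
As a rough illustration of the attention idea, the sketch below computes scaled dot-product attention for a single query in plain Python. The function names and the toy vectors are assumptions made for this example; real models use learned projections and batched matrix operations.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Similarity between the query and every key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the attention-weighted sum of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy 2-dimensional vectors for a three-word input (made-up numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
query = [1.0, 1.0]

weights, output = attend(query, keys, values)
print(weights)
print(output)
```

The third key is most similar to the query, so it receives the largest weight and its value contributes most to the output.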

1. Tokenization

Tokenization is the process of breaking down text into smaller units, such as words or subwords, that the model can process. Modern LLMs often use subword tokenization methods like Byte Pair Encoding (BPE), frequently through libraries such as SentencePiece, so that a fixed-size vocabulary can still represent rare or unseen words as sequences of smaller pieces.
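
To make the BPE idea concrete, here is a minimal sketch of how merge rules can be learned from a tiny word list. It is a simplified teaching version under stated assumptions (character-level start, no end-of-word marker, hypothetical function names), not the implementation used by any production tokenizer.

```python
from collections import Counter

def count_pairs(words):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a list of words."""
    # Start with each word split into single characters.
    words = {tuple(word): freq for word, freq in Counter(corpus).items()}
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words

corpus = ["low", "low", "lower", "lowest", "new", "newer"]
merges, words = learn_bpe(corpus, num_merges=3)
print(merges)
print(list(words))
```

After a few merges the frequent string "low" becomes a single token, while rarer words such as "lowest" are still represented as "low" plus smaller pieces.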

2. Pre-training

During pre-training, the model is exposed to a massive corpus of text data. This phase involves self-supervised learning tasks, such as predicting the next word in a sentence or filling in missing words. This process allows the model to learn grammar, facts about the world, and some reasoning abilities.
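
The next-word objective can be sketched in a few lines. The example below shows how one sentence yields several (context, target) training pairs and how the loss is computed for one prediction; the predicted probabilities are invented for illustration and do not come from a real model.

```python
import math

def next_token_examples(tokens):
    """Pair every prefix of the sequence with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def cross_entropy(predicted_probs, target):
    """Negative log-probability the model assigned to the true next token."""
    return -math.log(predicted_probs[target])

tokens = ["the", "cat", "sat", "down"]
examples = next_token_examples(tokens)
for context, target in examples:
    print(context, "->", target)

# Hypothetical model output after seeing ["the", "cat"].
predicted = {"sat": 0.7, "ran": 0.2, "down": 0.1}
loss = cross_entropy(predicted, "sat")
print(round(loss, 3))
```

The loss is small when the model puts high probability on the word that actually came next, and training adjusts the weights to lower this loss across the whole corpus.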

3. Fine-tuning

After pre-training, the model undergoes fine-tuning on a specific dataset tailored to a particular task, such as translation or sentiment analysis. Fine-tuning adjusts the model’s weights to enhance its performance for the target task.
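
A toy sketch can show what “adjusting the weights” means. Here a one-parameter model stands in for a pre-trained network, and a few steps of gradient descent move its weight toward the value that fits the new task. The model, data, and hyperparameters are all assumptions chosen for illustration.

```python
def fine_tune(weight, data, learning_rate=0.05, epochs=20):
    """Gradient descent on mean squared error for the model y = weight * x."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to the weight.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * grad
    return weight

pretrained_weight = 2.0  # stand-in for a weight learned during pre-training
task_data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]  # toy task where y = 3 * x

tuned_weight = fine_tune(pretrained_weight, task_data)
print(pretrained_weight, "->", round(tuned_weight, 3))
```

Fine-tuning starts from the pre-trained value rather than from a random one, which is why it typically needs far less data and compute than pre-training.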

4. Inference

During inference, the fine-tuned model generates predictions or responses based on new, unseen text inputs. It uses its learned knowledge to provide relevant and coherent outputs.
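
Text generation at inference time is usually a loop: predict the next token, append it, and repeat. The sketch below uses greedy decoding over a hypothetical lookup table that plays the role of the model; a real LLM conditions on the whole context and often samples instead of always taking the top token.

```python
def greedy_generate(next_token_probs, prompt, max_new_tokens=5, stop="<eos>"):
    """Repeatedly append the most probable next token until a stop condition."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs.get(tokens[-1])
        if not probs:
            break  # the toy model knows nothing about this token
        best = max(probs, key=probs.get)
        if best == stop:
            break  # the model predicts the end of the sequence
        tokens.append(best)
    return tokens

# Hypothetical "model": next-token probabilities given only the last token.
toy_model = {
    "once": {"upon": 0.9, "more": 0.1},
    "upon": {"a": 0.95, "the": 0.05},
    "a": {"time": 0.8, "hill": 0.2},
    "time": {"<eos>": 0.6, "ago": 0.4},
}

print(greedy_generate(toy_model, ["once"]))
```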

Applications of LLMs

LLMs have a wide range of applications across different industries. Here are some common uses:

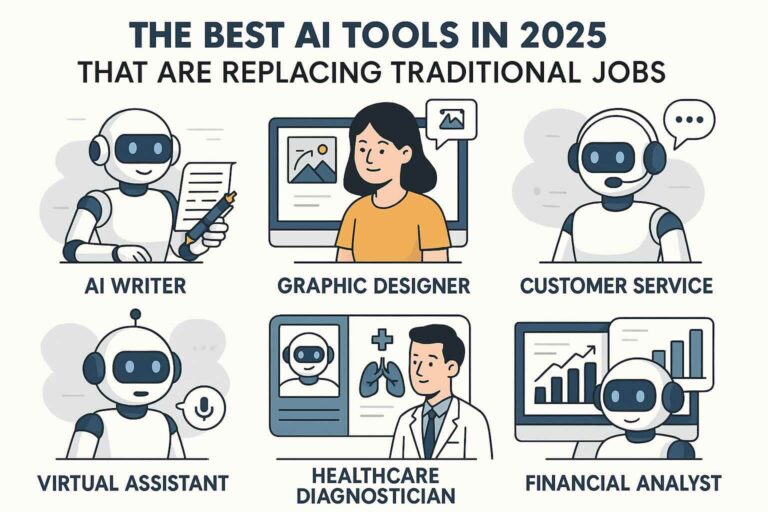

1. Chatbots and Virtual Assistants

LLMs power modern chatbots and are increasingly used in virtual assistants such as Siri, Alexa, and Google Assistant, enabling them to understand and respond to user queries naturally and contextually.

2. Content Creation

These models can generate human-like text for various purposes, including writing articles, creating marketing copy, and drafting emails.

3. Translation Services

LLMs are used in translation tools to convert text from one language to another while maintaining the original context and meaning.

4. Sentiment Analysis

Businesses use LLMs to analyze customer feedback and social media posts to gauge public sentiment and improve their products and services.

5. Healthcare

In healthcare, LLMs assist in processing and understanding medical records, generating patient reports, and providing decision support for clinicians.

Advantages of LLMs

1. High Accuracy: LLMs achieve high accuracy in various NLP tasks due to their extensive training on large datasets.

2. Flexibility: They can be fine-tuned for a wide range of specific applications.

3. Context Awareness: LLMs understand context better than traditional models, leading to more coherent and relevant outputs.

Challenges and Limitations

Despite their advantages, LLMs also face several challenges:

1. Computational Resources: Training and fine-tuning LLMs require significant computational power and memory.

2. Data Privacy: Using large datasets raises concerns about data privacy and security.

3. Bias: LLMs can inadvertently learn and propagate biases present in their training data.
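
The computational cost can be made tangible with a back-of-the-envelope estimate. The sketch below assumes 32-bit floats and an Adam-style optimizer that keeps gradients plus two extra buffers per parameter; it ignores activations and framework overhead, which can add substantially more. The parameter count is the commonly cited figure of roughly 124 million for the smallest GPT-2 model.

```python
def weights_memory_gb(num_params, bytes_per_value=4):
    """Memory needed just to hold the model weights."""
    return num_params * bytes_per_value / 1e9

def training_memory_gb(num_params, bytes_per_value=4, copies=4):
    """Rough estimate: weights, gradients, and two optimizer buffers."""
    return num_params * bytes_per_value * copies / 1e9

gpt2_small = 124_000_000  # approximate parameter count of the smallest GPT-2

print(weights_memory_gb(gpt2_small))
print(training_memory_gb(gpt2_small))
```

Under these assumptions, training needs about four times the memory of the weights alone, and the same arithmetic applied to a model with billions of parameters quickly exceeds a single consumer GPU.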

Building Your Own LLM

Creating an LLM from scratch is a complex task, but starting from a pre-trained model and fine-tuning it for a specific task is far more accessible. Here’s a simplified guide to building your own LLM this way:

Step 1: Set Up Your Environment

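Install the necessary libraries and tools. You will need Python, along with the Transformers, Datasets, and PyTorch libraries. Recent versions of Transformers also need Accelerate to run the Trainer with PyTorch.

    pip install transformers torch datasets accelerate

Step 2: Choose a Pre-trained Model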

Select a pre-trained model from the Hugging Face Transformers library.

    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
    model = GPT2LMHeadModel.from_pretrained("gpt2")

Step 3: Prepare Your Dataset

Load and preprocess your dataset. You can use datasets from the Hugging Face library or your own data.

    from datasets import load_dataset

    dataset = load_dataset("wikitext", "wikitext-2-raw-v1")

Step 4: Fine-tune the Model

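Tokenize the dataset, then fine-tune the model on it. The Trainer expects token IDs rather than raw text, and GPT-2 has no padding token by default, so both are handled before training.

    from transformers import DataCollatorForLanguageModeling, Trainer, TrainingArguments

    # GPT-2 has no padding token, so reuse the end-of-sequence token.
    tokenizer.pad_token = tokenizer.eos_token

    def tokenize(batch):
        return tokenizer(batch["text"], truncation=True, max_length=128)

    # Convert raw text to token IDs and drop lines that tokenize to nothing.
    tokenized = dataset.map(tokenize, batched=True, remove_columns=["text"])
    tokenized = tokenized.filter(lambda example: len(example["input_ids"]) > 0)

    # mlm=False makes the collator build labels for next-token prediction.
    data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

    training_args = TrainingArguments(
        output_dir="./results",
        num_train_epochs=3,
        per_device_train_batch_size=4,
        save_steps=10_000,
        save_total_limit=2,
    )

    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=tokenized["train"],
        eval_dataset=tokenized["validation"],
        data_collator=data_collator,
    )

    trainer.train()

Step 5: Evaluate the Model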

Evaluate the model’s performance.

    results = trainer.evaluate()
    print(results)

Step 6: Deploy the Model

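Deploy the model for use in applications. A simple starting point is to wrap it in a text-generation pipeline.

    from transformers import pipeline

    text_generator = pipeline("text-generation", model=model, tokenizer=tokenizer)
    output = text_generator("Once upon a time,", max_new_tokens=50)
    # The pipeline returns a list of dicts, one per generated sequence.
    print(output[0]["generated_text"])

Conclusion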

Large Language Models are powerful tools transforming how we interact with and leverage natural language data. Understanding their basics, applications, and how to build and fine-tune them can open up numerous possibilities for innovation and automation in various fields. As LLM technology continues to evolve, staying informed and experimenting with these models will be crucial for harnessing their full potential.